Introducing A New Workflow in Unity: Integrating Model Tracking Has Never Been Easier

With VisionLib 3.0, the most recent release, you have access to an even more robust and convenient solution: you can now integrate object tracking into your own AR/XR platforms more quickly and effectively.

A thorough optimization of the algorithms for stability, memory usage, and runtime performance completes the update.

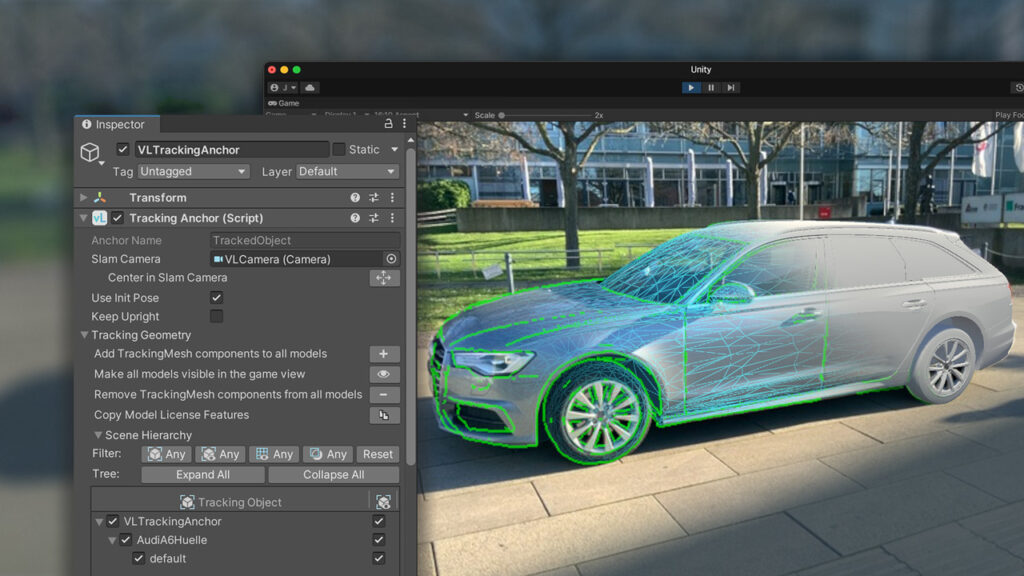

New Scene Setup

The new version lets you configure the entire tracking setup in Unity, eliminating the need for an external editor and manual editing of .vl configuration files.

New shortcut features and buttons make the workflow even simpler.

Models are now always loaded into VisionLib from Unity, so any model in Unity’s Hierarchy can be used for tracking.

The result: Developing your AR applications is faster and less error-prone, and you see results sooner. It is also easier to integrate VisionLib into an existing AR application.

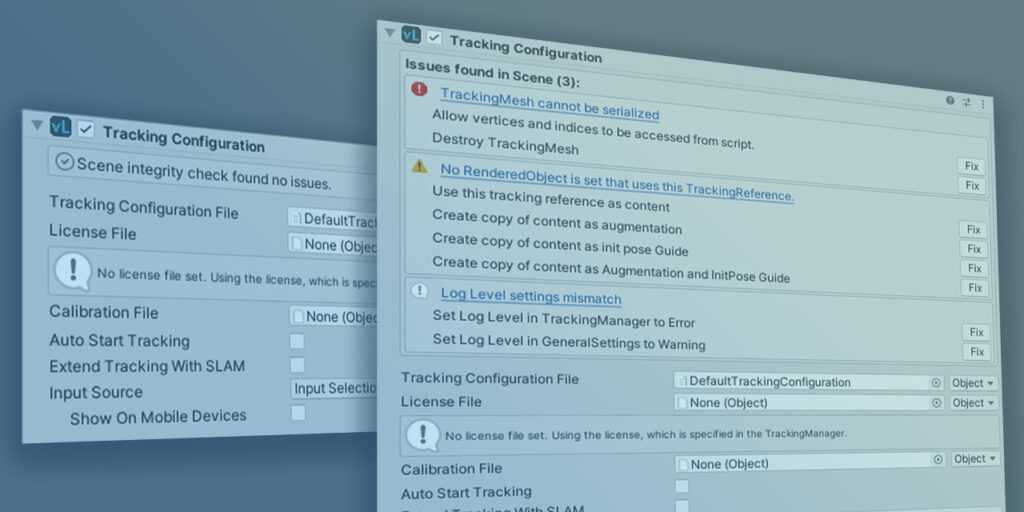

Scene Setup Validation

VisionLib verifies tracking setup data in Unity and offers recommendations for fixing issues.

The result: Debugging the tracking gets easier. Unexpected behavior and possible errors resulting from an incorrect scene setup are drastically reduced.

Advanced Compatibility & Flexibility

All VisionLib capabilities are now interoperable, so Multi-Model Tracking can be combined with Image Injection, Model Injection, and textured models. Multi-Model Tracking is therefore compatible with all platforms and devices.

The result: Features can now be combined without any programming overhead.

Runtime Optimization

The performance of the SDK has been substantially improved by optimizing many of the key operations that VisionLib performs.

The result: Tracking is smoother, and objects with complex data are easier to track.

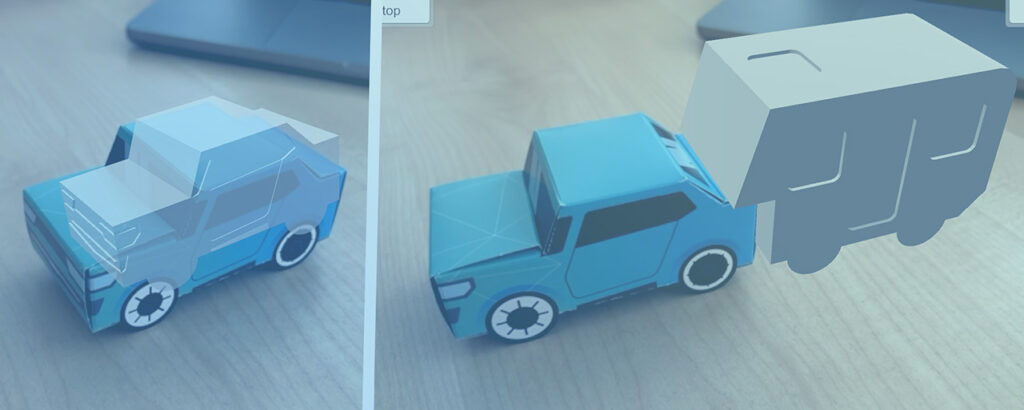

Init Pose Guidance

AR apps typically use separate 3D models for augmentation and for tracking. While VisionLib initializes and searches for the real object in the video stream, you may want to show the tracking model or some other guidance in advance.

Setting up this “Init Pose Guidance” and controlling what is used for tracking and what is used for augmentation is now simpler and more centralized.

The result: There is now an explicit shortcut for controlling this in the TrackingAnchor component.

Init Pose Interaction

With version 3.0, users can adjust the init pose while the application is running.

The result: If desired, the user can modify the init pose instead of moving the mobile device around the physical object until their view of the real object matches the predefined init pose.

VisionLib on Magic Leap 2

VisionLib can now be used with both HoloLens and Magic Leap 2 XR glasses.

The result: You can use VisionLib and its model tracking on two of the most powerful XR devices in the industry.
