Vision solutions give machines the ability to see and take actions to support or replace manual inspection tasks with the use of digital cameras and image processing. This makes machine vision ideal for automated inspection and measurement tasks designed to improve product quality and optimize production yield, cost and throughput. Using 2-D and 3-D vision cameras, users can perform a wide range of applications where there is a need to measure, locate, inspect and identify parts and products. New technology, including artificial intelligence (AI) algorithms, makes vision systems more effective than ever before. Understanding the cameras, the AI algorithms and how the systems are trained shows how high-speed machine vision with AI can improve automated quality inspection.

Machine vision refers to the use of industrial cameras, lenses and lighting to perform automated visual inspection of manufactured products. The overall machine vision process includes planning the details of the requirements and project, and then creating a solution. During runtime, the process starts with imaging, followed by automated analysis of the image and extraction of the required information, performing an action based on image analysis results, or both.

The Inspector8 Series cameras, such as the 830 and 850, are SICK’s latest hardware models. Inspector8XX is the model series covering both the Inspector850 series and the Inspector830 series. The 850 series has a higher-resolution imager, which is available in both a five-megapixel and a 12-megapixel version. SICK originally released a five-megapixel Inspector830 and is in the process of releasing a one-megapixel version and a color version. The differences between the Inspector850 and the Inspector830 are form factor and resolution; the processor, imager and software for both are identical.

SICK’s previous-generation hardware, the InspectorP6 Series cameras, ranges from the small one-megapixel 611 to the four-megapixel 650s. Again, the differences are form factor and resolution. The company’s InspectorP611s and 621s are entry-level devices with fixed-focus optics. The new-generation Inspector8XX camera hardware is migrating to full C-mount lenses and higher-resolution imagers.

When referring to resolution within vision systems, there is a distinction between sensor resolution and image resolution. The sensor resolution is fixed at five megapixels on the 830s and one megapixel on the 621s. The working distance and the focal length of the lens being used dictate the field of view. The image resolution depends on how big that field of view is: the smaller the field of view, the more pixels cover each millimeter of the part and the higher the image resolution will be. But the sensor resolution is still fixed at five megapixels for the 830s or one megapixel for the 621s.
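The relationship between fixed sensor resolution and variable image resolution can be illustrated with a small calculation. This is a minimal sketch: the pixel widths below are assumed values chosen for illustration, not figures taken from a SICK datasheet, and the function name is hypothetical.

```python
def pixels_per_mm(sensor_px_wide: int, fov_width_mm: float) -> float:
    """Image resolution along one axis: sensor pixels spread over the field of view."""
    if fov_width_mm <= 0:
        raise ValueError("field of view width must be positive")
    return sensor_px_wide / fov_width_mm


# Assumed horizontal pixel count for a five-megapixel sensor (illustrative only).
FIVE_MP_WIDTH_PX = 2448

# Same sensor, two different fields of view.
wide_view = pixels_per_mm(FIVE_MP_WIDTH_PX, 400.0)    # 400 mm wide field of view
narrow_view = pixels_per_mm(FIVE_MP_WIDTH_PX, 100.0)  # 100 mm wide field of view

print(f"400 mm field of view: {wide_view:.2f} px/mm")
print(f"100 mm field of view: {narrow_view:.2f} px/mm")
```

Shrinking the field of view by a factor of four puts four times as many pixels on each millimeter of the part, even though the sensor itself has not changed.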

Leveraging AI

Whether the camera is an entry-level 611 or one of the newer 830 and 850 models, the entire portfolio is artificial intelligence (AI) capable; all of the cameras can run SICK’s AI networks.

The Inspector83x is designed with ease of use in mind, thanks to on-device AI capabilities that can eliminate conventional machine vision complexities whenever changes to product designs or packaging are needed. Instead of spending hours on complex rule-based programming or calling in a machine vision specialist to troubleshoot, novice operators can add a new product example and the camera will learn by itself. It typically does not take more than 100 seconds to get started.

SICK introduced its first iterations of AI on the Inspector6 Series and the Inspector8 Series. The company came out with its first algorithms, called classification networks, around 2020. The next iteration added anomaly detection, and the following iteration added the ability to train directly on the device. Next to be released is an object detection and counting AI network.

The software behind it is scalable and identical from the entry-level 611 to the higher-level 830s and 850s. The software for the user interfaces is the same as well. They are all AI capable and run the same AI networks. With the next generation of cameras, the 830s and 850s, SICK concentrated on including an AI accelerator chip on board the camera. Now, the cameras can run those AI networks, the classification and anomaly networks, much faster.

The difference between running AI on the 6xx series cameras and on the 8xx series cameras is speed. While the 6xx series can run AI, it may require 300 to 700 milliseconds of processing time, which is an eternity for an automated production line. On a bottling line, for example, image processing and network inference would not be fast enough. But with the AI accelerator and the new hardware, the networks can run eight to 12 times faster. AI networks can run directly on the 8xx series cameras in 20 to 40 milliseconds, which opens up inline production applications such as bottling or packaging. This faster inference opens up more industries and applications.
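To see why processing time matters on a fast line, the quoted times can be converted into a rough upper bound on parts inspected per minute. This is a back-of-the-envelope sketch that assumes one inference per part, run back to back, and ignores exposure, image transfer and I/O overhead; the function name is hypothetical.

```python
def max_parts_per_minute(processing_ms: float) -> float:
    """Upper bound on inspection rate when each part needs one inference."""
    if processing_ms <= 0:
        raise ValueError("processing time must be positive")
    return 60_000.0 / processing_ms


# Processing times quoted in the text, in milliseconds.
cases = [
    ("6xx series, slow end", 700),
    ("6xx series, fast end", 300),
    ("8xx series, slow end", 40),
    ("8xx series, fast end", 20),
]

for label, ms in cases:
    print(f"{label}: {ms} ms -> about {max_parts_per_minute(ms):.0f} parts/min")
```

Under these assumptions, the 6xx series tops out at a few hundred parts per minute at best, while the 8xx series reaches into the thousands, which is the range where inline bottling and packaging inspection becomes practical.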

Inspector83x application integration

Since the Inspector83x units are self-contained smart cameras, they don’t require a separate controller, back-end programmable logic controller (PLC) or similar hardware to run the networks. They do their own processing, analytics and input/output (I/O) handling. Whether the application is in consumer goods or logistics, the inspections are done by the camera.

Integrating the cameras into an actual system is up to the user. SICK supports industrial Ethernet protocols like Profinet and EtherNet/IP, as well as plain TCP/IP. The cameras can communicate over these protocols regardless of the control system or PLC.

The cameras have traditional digital I/O handling as well. Applications can be as simple as doing an inspection and triggering an output to indicate pass or fail. However, if the user needs data and data integration into their control system, the aforementioned protocols are supported.

Although the cameras’ I/O is limited to seven inputs and five outputs, triggering and I/O handling capabilities are built in. For example, a trigger sensor can be an input, so that an event triggers the camera to take an image. Users could also use some of the inputs for job or recipe management: a combination of inputs can select among multiple independent configurations, switching jobs or recipes as needed. The same switching can be done using commands over the Ethernet network. Other I/O can be used for conveyor tracking using encoder inputs.

With queued inputs, delayed outputs and encoder tracking, the SICK Inspector83x cameras can not only monitor exactly where on a conveyor belt an image is triggered, they can also delay the output by a certain number of millimeters, for example, because that is where the diverter mechanism sits to remove the identified faulty product. This functionality also works well for tracking inspected packages. It can be done entirely on the camera without having to perform those tasks on a PLC.
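The idea of a queued, encoder-delayed output can be sketched in a few lines. This is an illustrative model only, not SICK’s implementation: the class name, method names and the encoder scaling (0.5 mm per tick) are assumptions made for the example.

```python
from collections import deque


class DelayedReject:
    """Queue failed inspections and fire an output after the belt travels a set distance."""

    def __init__(self, delay_mm: float, mm_per_tick: float):
        # Convert the physical distance to the diverter into encoder ticks.
        self.delay_ticks = round(delay_mm / mm_per_tick)
        self.pending = deque()  # encoder counts at which to fire, oldest first

    def on_inspection(self, encoder_count: int, passed: bool) -> None:
        """Record where a failed part was imaged; good parts need no action."""
        if not passed:
            self.pending.append(encoder_count + self.delay_ticks)

    def on_encoder(self, encoder_count: int) -> bool:
        """Return True if the diverter should fire at this encoder count."""
        fire = False
        while self.pending and self.pending[0] <= encoder_count:
            self.pending.popleft()
            fire = True
        return fire


# Diverter assumed to sit 250 mm downstream; encoder assumed to give 0.5 mm per tick.
tracker = DelayedReject(delay_mm=250.0, mm_per_tick=0.5)
tracker.on_inspection(encoder_count=1000, passed=False)  # faulty part imaged here
tracker.on_inspection(encoder_count=1200, passed=True)   # good part, nothing queued

# Simulate the belt moving and record when the diverter fires.
fired_at = [count for count in range(1000, 2001, 100) if tracker.on_encoder(count)]
print(fired_at)
```

Because the delay is expressed in belt travel rather than time, the reject still lands on the right product if the conveyor speeds up, slows down or stops.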

On-device training

The first iteration of SICK’s AI training used a cloud-based training environment that output a neural network file, which could be loaded onto the camera via the user interface. SICK’s dStudio is a cloud-based environment that enables users to upload their images to the cloud and sort them into classes. For example, if a user has 10,000 images of apples and oranges, they can create those two classes in the dStudio cloud-based training environment, then upload the images to the SICK cloud. Users can sort through those images, click train and, a half hour or so later, they get a JSON or a neural network file, which can be deployed and run directly on the camera.

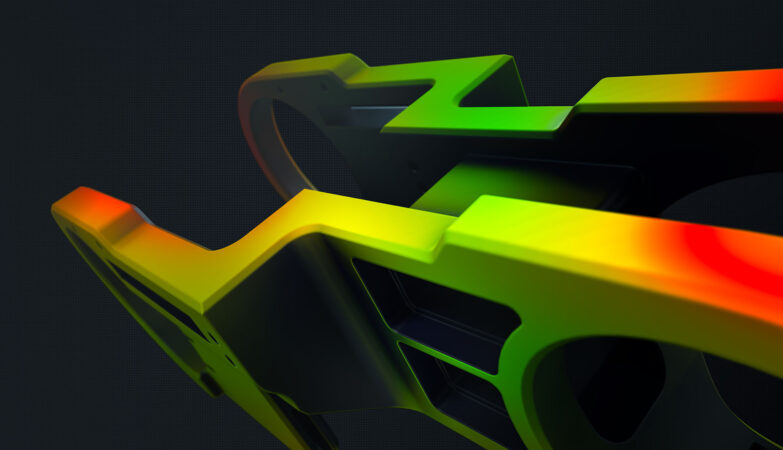

SICK’s two new networks, anomaly and classification, offer on-device training instead of requiring images to be uploaded to a cloud. For anomaly detection, simply open the anomaly tool, save up to 100 images of what a good product should look like and train the network on the device itself. Training takes from a few seconds to a couple of minutes, depending on the number of images and the network intensity. The network deploys and runs directly on the camera without anything having to be uploaded. Images are captured, stored and trained directly on the device. Only good images are collected and the bad images are ignored.

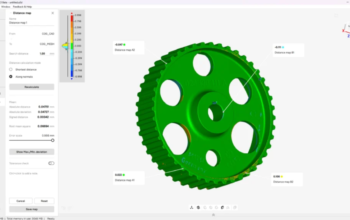

Once trained, the network provides an anomaly score, which is based on the network training and the example images imported into the neural network or the training algorithm. The anomaly score is a number that indicates how much the image deviates from the reference images. From there, users can set an adjustable threshold, which can be modified through the user interface.

It’s not necessary to inspect the entire image. Sometimes, only a portion of an image is of importance. For example, users can create an AI network looking at a specific portion of a circuit board, then create another anomaly network looking at a different part of the circuit board. Instead of creating one giant network, having individual smaller inspection regions will yield more fine-tuned results, because a defect makes up a larger share of a small region than of a large one. Anomalies such as significant defects or missing components will therefore stand out more clearly.
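The effect of splitting an inspection into smaller regions, each with its own threshold, can be shown with a toy example. This is a sketch under stated assumptions: the scoring function below is a simple mean pixel difference standing in for a trained anomaly network, and the region names, boxes and thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Region:
    name: str
    box: tuple[int, int, int, int]  # (x, y, width, height) in pixels
    threshold: float                # maximum anomaly score that still passes


def crop(image, box):
    """Cut a rectangular region out of a 2-D list of pixel values."""
    x, y, w, h = box
    return [row[x:x + w] for row in image[y:y + h]]


def toy_anomaly_score(patch, reference):
    """Mean absolute pixel difference; a stand-in for a trained network's score."""
    diffs = [abs(p - r) for prow, rrow in zip(patch, reference) for p, r in zip(prow, rrow)]
    return sum(diffs) / len(diffs)


def inspect(image, reference, regions):
    """Score each region separately and compare it with that region's threshold."""
    results = {}
    for region in regions:
        score = toy_anomaly_score(crop(image, region.box), crop(reference, region.box))
        results[region.name] = score <= region.threshold
    return results


# Reference: a uniform 8x4 image. Test image: one pixel changed in the right half,
# simulating a missing component.
reference = [[100] * 8 for _ in range(4)]
image = [row[:] for row in reference]
image[1][6] = 0

regions = [
    Region("left", (0, 0, 4, 4), threshold=5.0),
    Region("right", (4, 0, 4, 4), threshold=5.0),
]

whole_image_score = toy_anomaly_score(image, reference)
results = inspect(image, reference, regions)
print(f"whole image score: {whole_image_score}")
print(results)
```

With the same threshold of 5.0, the defect is diluted across the whole image and would pass, while the smaller right-hand region scores above the threshold and fails, which is the behavior the paragraph above describes.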

The point is to use AI tools as a time saver and efficiency aid that can help streamline user applications, or at least give users a good baseline for how well an AI-based inspection is going to work. And they can do it within minutes.

Configuration user interface

Nova is the configuration user interface for SICK’s cameras. It’s common across the entire portfolio of cameras. With the Nova environment, SICK has moved away from software installed on a PC. Instead, users access a web browser interface. Entering the IP address of a camera into a browser brings up SICK’s SOPASair user interface, which is another name for Nova.

SOPASair is an application for mobile devices and browsers and is used for the parameterization, configuration, diagnostics and monitoring of many SICK sensors. SOPASair helps users find connected devices and establish connections. Other features include a modern and user-friendly interface, high-contrast mode, device catalog, device emulation and many more.

As previously mentioned, it is scalable throughout the whole portfolio. From entry level to higher end, users have the same look and feel, same code and same functionality, with the exception of resolution and processing times. This also carries over to SICK’s 3-D cameras.

Final thoughts

What’s most important is the demystifying of AI related to vision systems. Many think that AI is complex, or that it requires a vast amount of knowledge to deploy or run. That is not true today. Others are hesitant to use AI because it’s not the hard data that users typically get with traditional machine vision tools. But using a well-trained algorithm to do a prediction can be just as reliable as using tables of data. Thinking you need a PC or hundreds of thousands of images to train a network must give way to the realization that the ease of use of today’s vision solutions makes the entry to using AI algorithms accessible to an everyday user.

The reality is that, with just a handful of images trained directly on the device, it’s easy to reliably train an AI network in seconds. The entry into running, deploying and using AI has gotten to a point now where anybody can do it, and the network could actually be trained using five images and run very robustly. Accessibility, ease of use and the lower barrier of entry to using these more advanced technologies and algorithms have opened up possibilities for more users than ever before.