This article is written by George Schuetz, Mahr Federal Inc. and reproduced from https://www.mahr.de/en-us/Services/Production-metrology/Know-how/Gaging-Tips/Basics-of-Measurement-Gaging-Tips/?ContentID=110464

Many questions have to do with various “rules of thumb” that have been floating around the industry for ages. During this time, much has been done to standardize the measurement process and make it more reliable and repeatable. Through international, national and industrial organizations, procedures have been put in place to help ensure that the measurement process, and the verification of that process, is correct and followed every time.

Interestingly, most of these measuring processes are based on old rules of thumb that were around for many years before they were studied, improved upon, and eventually incorporated into one or more of today’s standards. The thing to remember is that while these old “rules” may form the basis of today’s practice, they are not necessarily the best practice themselves. But when a part quality disaster is unfolding on the shop floor, referring back to some of these basics may save the day.

The granddaddy of them all is the ten-to-one rule, which most likely came out of early manufacturing, turned into a military standard, and then evolved into some of the standards used today. Its basis still makes sense when making those basic setup decisions that put good gages together.

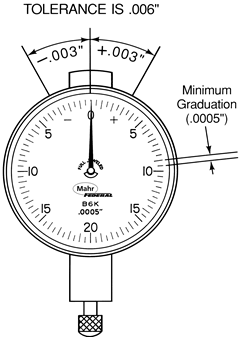

For example, in gages using analog or digital readouts, the rule says the measuring instrument should resolve to approximately 1/10 of the tolerance being measured. This means that if the total tolerance spread is 0.0002 in. (i.e., ±0.0001 in.), the smallest increment displayed on the gage should be 20 µin. A gage that only reads to 50 µin. can’t resolve closely enough for accurate judgments in borderline cases, and doesn’t allow for the observation of trends within the tolerance band. On the other hand, a digital gage that resolves to 5 µin. might give the user the impression of excessive part variation as the trailing digits flicker on the display. And 10:1 is not always achievable: on some extremely tight tolerance applications (say, ±50 µin. or less) it may be necessary to accept 5:1. For coarser work, though, 10:1 or something very close to it is always a good recommendation.
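The arithmetic behind the resolution rule can be sketched in a few lines of Python. This is only an illustration of the rule of thumb, not part of any standard; the function names `target_resolution` and `resolution_ratio` are hypothetical, and all values are in microinches to match the example above.

```python
def target_resolution(total_tolerance_uin, ratio=10):
    """Resolution (in µin.) that gives the requested tolerance-to-resolution ratio."""
    return total_tolerance_uin / ratio


def resolution_ratio(total_tolerance_uin, gage_resolution_uin):
    """Number of display increments that fit inside the total tolerance spread."""
    return total_tolerance_uin / gage_resolution_uin


# A total tolerance spread of 0.0002 in. (±0.0001 in.) is 200 µin.
spread = 200

print(target_resolution(spread))      # 20.0 -> the 10:1 target
print(resolution_ratio(spread, 50))   # 4.0  -> too coarse for borderline calls
print(resolution_ratio(spread, 5))    # 40.0 -> finer than the rule asks for
```

Passing `ratio=5` to `target_resolution` gives the relaxed 5:1 target mentioned for very tight tolerances.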

Without a doubt, the most common question I am asked has to do with selecting a gage: “I’ve got a bushing with a 0.750 in. bore that has to hold ±0.001 in. What kind of gage should I use?” There are a number of choices: a dial bore gage, an inside micrometer, an air plug, a self-centralizing electronic plug like a Dimentron®, or any one of several other gages. But picking the right gage for an application depends basically on three things: the tolerance you are working with, the volume of components you are producing, and the degree of flexibility you require in the gaging system.

For gage performance, we go back to our ten-to-one rule: if your tolerance is ±0.001 in., you need a gage whose performance is at least ten times better than that, or within one tenth of the tolerance (±0.0001 in.). A gage GR&R (Gage Repeatability and Reproducibility) study is one way of determining the gage performance, and the gage you pick should pass your own in-house GR&R requirements. GR&R studies are designed to show how repeatable that specified accuracy is when the gage is used by a number of operators, measuring a number of parts in the manufacturing environment. There is no single standard for GR&R studies, but generally, it is a statistical approach to quantifying gage performance under real-life conditions. Often this is expressed as the ability to measure within a certain range a certain percent of the time. Because “10%” is a commonly quoted GR&R number, it should be noted that this figure is quite different from the traditional ten-to-one rule of thumb, though the old rule may well be its distant origin.
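To make the distinction concrete, here is a rough sketch of one way repeatability is sometimes expressed as a percentage of tolerance. It is not a full GR&R study (it ignores reproducibility across operators and parts), and it rests on assumptions that are not from this article: the six-standard-deviation spread is just one common convention, the function name is hypothetical, and the readings are made-up illustration data.

```python
import statistics


def repeatability_percent_of_tolerance(readings_uin, total_tolerance_uin, k=6.0):
    """Spread of repeated readings (k standard deviations) as a % of tolerance.

    Assumption: k = 6 is one common convention; in-house procedures may differ.
    """
    sigma = statistics.stdev(readings_uin)  # sample standard deviation
    return 100.0 * k * sigma / total_tolerance_uin


# Hypothetical deviations from nominal (µin.): one part measured five times
# by one operator.
readings = [10, -10, 10, -10, 0]

# A ±0.001 in. tolerance is a total spread of 2000 µin.
percent = repeatability_percent_of_tolerance(readings, 2000)
print(f"{percent:.1f}% of tolerance")
```

A percentage like this describes statistical scatter under repeated use, whereas the ten-to-one rule is a simple ratio between the tolerance and the gage's rated performance.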

Typically, the rule of thumb for selecting a master has been to choose one whose tolerance is 10 percent of the part tolerance. This, combined with the gage’s performance, should provide adequate assurance of a good measurement process. It’s usually not worthwhile to buy more accuracy than this “ten-to-one” rule: it costs more; it doesn’t improve the accuracy; and the master will lose calibration faster. On the other hand, when manufacturing to extremely tight tolerances, you might have to use a ratio of 4:1 or even 3:1 between gage and standard, simply because the master cannot be manufactured and inspected using a 10:1 rule.
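The master-selection rule is the same kind of ratio, and a small sketch shows how the relaxed 4:1 and 3:1 cases compare. The function names are hypothetical, values are ± tolerances in microinches, and the ±12.5 µin. master in the last line is an invented figure for illustration only.

```python
def master_tolerance(part_tolerance_uin, ratio=10):
    """Largest master tolerance (± µin.) that still meets the given ratio."""
    return part_tolerance_uin / ratio


def achieved_ratio(part_tolerance_uin, master_tolerance_uin):
    """Ratio of part tolerance to master tolerance, e.g. 10.0 for 10:1."""
    return part_tolerance_uin / master_tolerance_uin


# A part tolerance of ±0.001 in. is ±1000 µin.
print(master_tolerance(1000))       # 100.0 -> ±0.0001 in. at 10:1
print(master_tolerance(1000, 4))    # 250.0 -> at a relaxed 4:1

# Tight-tolerance case: ±50 µin. part with a hypothetical ±12.5 µin. master.
print(achieved_ratio(50, 12.5))     # 4.0
```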

These are three short examples of where a rule of thumb may be the basis for a gage decision. The rule does not necessarily give the final answer, but it provides a way of working towards the best choice based on accepted test processes. There are many other rules (relating to surface finish checking and gage design) that are also interesting to review.

Minimum Graduation Value: the value of the smallest graduation marked on the dial indicator. The rule is to select the indicator whose graduation value is closest to 10% of the tolerance spread of the work you are measuring. This ensures that the tolerance will span about ten divisions of the dial.
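Choosing the graduation value closest to 10% of the tolerance spread is a simple nearest-value search, sketched below. The function name `closest_graduation` and the list of available graduations are assumptions made for illustration; substitute the values of the indicators actually on hand.

```python
def closest_graduation(total_tolerance_uin, available_uin, ratio=10):
    """Pick the available graduation value nearest to 1/ratio of the tolerance."""
    target = total_tolerance_uin / ratio
    return min(available_uin, key=lambda g: abs(g - target))


# Hypothetical set of dial indicator graduation values, in µin.
available = [1000, 500, 100, 50]

# A tolerance spread of 0.001 in. (±0.0005 in.) is 1000 µin.
chosen = closest_graduation(1000, available)
print(chosen)           # 100 -> a 0.0001 in. graduation
print(1000 / chosen)    # 10.0 -> divisions spanned by the tolerance
```

When two graduation values are equally close to the target, `min` returns whichever appears first in the list, so the order of `available` decides the tie.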